PCIe RAID Results

…so after some more communication with ASUS, we were told that for PCIe RAID to work properly, we *must* flip this additional switch:

As it turns out, the Auto setting is not intelligent enough to pick up on all of the other options we changed. Note that switching to the x4 setting disables the 5th and 6th ports of the Intel SATA controller. Internally, the pair of lanes that were driving that last pair of SATA ports on the Intel controller are switched over to become the 3rd and 4th PCIe lanes, now linked to PCIe_3 on this particular motherboard. Other boards may be configured differently. Here is a diagram that should help explain how RST will work on 100 series (Z170) motherboards moving forward:

The above diagram shows the portion of the 20 PCIe lanes that can be linked to RST. Note that this is a 12-lane chunk *only*, meaning that if you intend to pair up two PCIe x4 SSDs, only four lanes will remain, which translates to a maximum of four remaining RST-linked SATA ports. By this logic, there is no way to have all six Intel SATA ports enabled while also using a PCIe RAID. For the Z170 Deluxe configuration we were testing, the center four lanes were SATA, M.2 used the left four, and PCIe slot 3 used the right four. Note that using those last four lanes disables the last two Intel SATA ports. The Z170 Deluxe has eight SATA ports in total, so the total dropped to six, but only four of those are usable with RST in a RAID configuration.
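The lane budget described above can be sketched as simple arithmetic. This is a minimal model, assuming (as the text implies) that each PCIe x4 SSD takes four lanes of the 12-lane RST-capable chunk and that each RST-linked SATA port takes one lane. The helper name is hypothetical, and the sketch does not model any particular board's switch settings.

```python
# Minimal model of the 12-lane RST-capable chunk described above.
# Assumptions (not from Intel documentation): one lane per RST SATA port,
# four lanes per PCIe x4 SSD, and at most six Intel SATA ports.
RST_LANES = 12
LANES_PER_X4_SSD = 4
MAX_INTEL_SATA_PORTS = 6


def rst_sata_ports_left(num_x4_ssds: int) -> int:
    """Hypothetical helper: RST-linked SATA ports left after assigning x4 SSDs."""
    used = num_x4_ssds * LANES_PER_X4_SSD
    if used > RST_LANES:
        raise ValueError("not enough RST-capable lanes for that many x4 SSDs")
    # Remaining lanes can feed SATA, but the Intel controller tops out at six ports.
    return min(RST_LANES - used, MAX_INTEL_SATA_PORTS)


for ssds in range(3):
    print(f"{ssds} x4 SSD(s) -> {rst_sata_ports_left(ssds)} RST SATA ports")
```

With two x4 SSDs in a PCIe RAID the model returns 4, matching the point above that all six Intel SATA ports cannot stay enabled alongside a PCIe RAID.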

Once we made the x4 change noted above, everything just started working as it should have in the first place (even booting from SATA, which was previously disabled when the PCIe-related values were not perfectly lined up). Upon booting from that SATA SSD, we were greeted with this when we fired up RST:

…now there's something you don't see every day: PCIe SSDs sitting in a console that had only ever shown SATA devices. Here's the process of creating a RAID:

You can now choose SATA or PCIe modes when creating an array.

Stripe size selection is similar to prior versions. I recommend sticking with the default that RST chooses based on the capacity of the drives being added to the array. You can select a larger stripe size at the cost of lower small-file performance. I don't recommend going below the recommended value, as doing so typically reduces the efficiency of the RST driver (the thread handling the RAID pegs its thread / memory allocation just handling the lookups), which results in a performance hit on the RAID.
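To illustrate why very small stripes add driver overhead, here is a generic RAID-0 address-mapping sketch. It assumes conventional round-robin striping across members; it is not Intel's RST code, and the function names are hypothetical. The second function counts how many stripe units (and therefore member lookups) a single request has to be split into.

```python
from typing import Tuple


def locate(offset: int, stripe_size: int, members: int) -> Tuple[int, int]:
    """Map a logical byte offset to (member index, byte offset on that member)."""
    stripe_index = offset // stripe_size   # which stripe unit the byte falls in
    member = stripe_index % members        # units are dealt round-robin to members
    row = stripe_index // members          # full stripe rows before this unit
    return member, row * stripe_size + offset % stripe_size


def stripe_units_touched(offset: int, length: int, stripe_size: int) -> int:
    """Number of stripe units one request of `length` bytes spans."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return last - first + 1


KB = 1024
for stripe in (4 * KB, 16 * KB, 128 * KB):
    units = stripe_units_touched(0, 128 * KB, stripe)
    print(f"{stripe // KB}KB stripe: 128KB read split into {units} unit(s)")
```

Under this model, halving the stripe size doubles the number of pieces each large transfer is split into, which is consistent with the observation above that going below the default mostly adds lookup work.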

With the array created, I turned up all of the caching settings (only do this in production if you really need the added performance, and only if the system is behind a UPS). I also performed this testing with TRIMmed / empty SSD 750s. This is normally taboo, but I wanted to test the maximum performance of RST here, *not* the steady-state performance of the SSDs being tested.

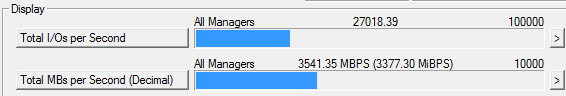

The following results were attained with a pair of 1.2TB SSD 750s in RAID-0, using the default 16KB stripe size.

Iometer sequential 128KB read QD32:

Iometer random 4K read QD128 (4 workers at QD32 each):

ATTO (QD=4):

For comparison, here is a single SSD 750, also ATTO QD=4:

So what we are seeing here is that DMI 3.0 bandwidth saturates at ~3.5 GB/sec, so this is the upper limit for sequential transfers on whichever pair of PCIe SSDs you decide to RAID together. This chops off some of the maximum read speed of the 750s, but twice the write speed of a single 750 is still less than 3.5 GB/sec, so writes see a neat doubling in performance, for an effective 100% scaling. I was a bit more hopeful for 4K random performance, but a quick comparison of the 4K results in the two ATTO runs above suggests that even with caching at the maximum setting, RST topped out at the same 4K random figure as a single SSD 750. Testing at stripe sizes smaller than the default 16K did not change this result (and performed lower).
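The scaling argument above is simple arithmetic: an ideal RAID-0 delivers the sum of its members' sequential speeds, clipped by whatever link sits in front of them. Below is a minimal sketch using the ~3.5 GB/sec DMI 3.0 ceiling observed here. The per-drive figures are Intel's rated sequential specs for the 1.2TB SSD 750 and are assumptions, not numbers measured in this testing.

```python
# Observed DMI 3.0 ceiling from this testing (~3.5 GB/sec).
DMI_CEILING_MB_S = 3500

# Assumed per-drive ratings for a 1.2TB SSD 750 (vendor spec, not measured here).
SSD750_READ_MB_S = 2400
SSD750_WRITE_MB_S = 1200


def raid0_sequential(per_drive_mb_s: float, drives: int,
                     ceiling_mb_s: float = DMI_CEILING_MB_S) -> float:
    """Ideal RAID-0 sequential throughput, clipped at the upstream link ceiling."""
    return min(per_drive_mb_s * drives, ceiling_mb_s)


for label, single in (("read", SSD750_READ_MB_S), ("write", SSD750_WRITE_MB_S)):
    pair = raid0_sequential(single, 2)
    gain = pair / single - 1
    print(f"{label}: {pair} MB/s, {gain:.0%} over one drive")
# read: 3500 MB/s, 46% over one drive
# write: 2400 MB/s, 100% over one drive
```

Reads hit the DMI ceiling before the two drives are fully used, while writes stay under it and double, which is the pattern described above.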

The takeaway here is that PCIe RAID is here! There are a few tricks to getting it running, and it may be a bit buggy for the first few BIOS revisions from motherboard makers, but it is a more than welcome feature, and it works reasonably well considering the complications Intel would have had to overcome to get all of this to perform as well as it does. So long as you are aware of the 3.5 GB/sec hard limit of DMI 3.0, and realize that 4K performance may not scale like it used to with SATA devices, you'll still get better performance than you can from any single PCIe SSD to date!


Hey guys, what's the version of Intel Rapid Storage Technology that you have installed, and where did you get it? I'm not seeing the options shown in yours.

My setup: ASUS Z170-DELUXE, xp951 (512GB) and Intel 750 1.2TB drives.

I created a RAID 0 of the Intel 750 + xp951. Capacity dropped to 953GB.

I'm able to see the drives in RAID 0 during install, but why is the capacity lowered (953GB)?

The W10 install went smoothly, but it does not boot. I tried various changes and am still unable to boot.

Can I have the BIOS settings to make UEFI boot work with RAID 0?

Thanks for your article.


2 members of a RAID-0 array must contribute the exact same amount of storage to that array, hence (as summarized in a reply above) RST allocated only 512GB (unformatted) from the Intel 750, as follows:

512GB (xp951) + 512GB (Intel 750) = 1,024 GB unformatted

Using a much older version of Intel Matrix Storage Console, it reports 48.83GB after formatting a 50GB C: partition on 2 x 750GB WDC HDDs in RAID-0.

Using that ratio, then, we predict:

48.83 / 50 = 0.9766; 0.9766 x 1,024 ≈ 1,000.04 GB (formatted)

You got 953GB, which is close enough, considering that we are looking at entirely new storage technologies here, and later operating systems will default to creating shadow and backup partitions, which explain the differences between 1,000 and 953.

Hope this helps.

MRFS
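A second arithmetic check on the 953GB figure, assuming nominal decimal capacities (the exact byte counts of these drives were not given) and that Windows labels binary gibibytes (2^30 bytes) as "GB": the array capacity is the member count times the smallest member, and converting that total to binary units lands at roughly 953.

```python
GB = 10**9    # decimal gigabyte, as used on drive labels
GiB = 2**30   # binary unit that Windows displays as "GB"


def raid0_capacity_bytes(member_sizes_bytes):
    """RAID-0 takes the same amount from every member: the smallest member's size."""
    return len(member_sizes_bytes) * min(member_sizes_bytes)


# Nominal sizes assumed for the xp951 (512GB) and Intel 750 (1.2TB) from the comment.
members = [512 * GB, 1200 * GB]
total = raid0_capacity_bytes(members)
print(total / GB)              # 1024.0 decimal GB
print(round(total / GiB, 1))   # 953.7 as Windows would report it
```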


Did you format with GPT or MBR?

I seem to recall that UEFI booting requires a GPT C: partition.

Please double-check this, because I've only formatted one GPT partition in my experience (I'm still back at XP/Pro).

Allyn, if you are reading this, please advise.

THANKS!

MRFS


Capacity is lowered to match the smaller drive. Is anyone able to boot Windows from a RAID 0 PCIe setup?


https://pcper.com/reviews/Storage/Intel-Skylake-Z170-Rapid-Storage-Technology-Tested-PCIe-and-SATA-RAID/PCIe-RAID-Resu

Can three Samsung SM951 128GB AHCI drives work in RAID 0?

If I buy three drives like this one:

http://www.amazon.com/Samsung-SM951-128GB-AHCI-MZHPV128HDGM-00000/dp/B00VELD5D8/ref=sr_1_3?s=pc&ie=UTF8&qid=1439745389&sr=1-3&keywords=Samsung+SM951

what speed can they reach?

What is the difference between the Samsung SM951 and the SSD 750?


I am buying a Gigabyte G1 Gaming board. It has two M.2 slots on the board and says it can do RAID 0. If I put two SM951 256GB M.2 cards on the board, should I be able to run RAID 0 and also boot from it, and how would I set it up? I was also planning on a 2TB HDD for storage. Any thoughts?


Good luck. I just returned my G1 Gaming: I could not get CSM to stay disabled, and I never got the Intel RAID setup to appear in the BIOS.


This was just a fine article, advanced and I enjoyed reading it. Thanks

Way back, I was one of the first to run bootable dual SSDs in RAID 0 with an LSI RAID controller. This month I hope to purchase the new Samsung 950 Pro V-NAND M.2.

I want to run a pair in RAID 0.

But, and I quote the author: So what we are seeing here is that the DMI 3.0 bandwidth saturates at ~3.5 GB/sec, so this is the upper limit for sequentials on whichever pair of PCIe SSDs you decide to RAID together.

Since the new Samsungs are in broad terms:

Sequential Read 2,500 MB/s

Sequential Write 1,500 MB/s

Ergo, running dual Samsung 950 Pro V-NAND M.2 drives would give a theoretical sequential read of 5 GB/s, surpassing the bandwidth of DMI 3.0 by 1.5 GB/s.

Question:

Even if a hardware RAID controller is used, will it be limited to DMI bandwidth?

I take it there is no workaround for this bottleneck at the moment…


> Even if a hardware raid controller is used, it will be limited to DMI bandwidth?

Only if it is connected to a PCIe slot behind DMI.


hi Allyn

(sorry for my bad English)

I'm trying to install two SM951 NVMe drives in RAID 0 on my Sabertooth X99, and I'm having some difficulty.

The SSDs are installed one in the native M.2 slot and one in a PCIe adapter in the second slot.

The BIOS sees both.

I can install the OS on either one.

I tried some of the BIOS changes from your article.

I tried modding the BIOS file to update it with version 14 of the Intel RST driver (in fact, in the advanced mode of the BIOS, the Intel Rapid Storage entry shows version 14, but no disk is detected).

In the NVMe configuration they are listed as:

bus 3 sm951…..

bus 5 sm951…..

Can you help me in some way?

Thanks

parrotts


Allyn,

By implementing RAID 0 on an ASRock Z170 Extreme7 using 3 x Samsung 950 Pro V-NAND M.2 (upcoming) drives inserted into the three available Ultra M.2 ports (which are connected directly to the CPU, i.e. not behind a chipset with the DMI 3.0 limit of 3.5 GB/sec), wouldn't it be possible to achieve about 7.5 GB/s sequential read?


As it turns out, the Intel 750 was tested by some folks at ASUS at over 2.7 GB/s sequential read and 1.3 GB/s write. Who needs anything else? I don't think Samsung will be as fast, and it's late to the market.


October 14, 2015 | 09:41 PM – Posted by Md (not verified)

Hi Allyn,

Follow-up simple question, please. Can you give, or direct me to, steps for installing Windows 7 Pro onto an SM951 in the M.2 slot of an ASUS Z170-A mobo as the boot drive?

I have read endless threads on problems and am still not clear on how to do it.

So far your thread is great… but I'm not seeking RAID, just booting off the SM951 with Windows 7.

Thanks in advance..


Hi, have you tried installing to a normal SATA SSD and then cloning it to the SM951?


Hi,

If I understand correctly, the max bandwidth over the DMI 3.0 Z170 SATA Express connector is 3.5 GB/s? What about on the X99 chipset? Is it also possible to RAID M.2 on X99? And why do motherboard manufacturers advertise the speed limit as 32Gb/sec?

Confused.

Thanks


Hi Ryan, I have some questions about RAID 0 configurations of NVMe M.2 SSDs. If I set up RAID 0 with two NVMe SSDs running on two PCIe 3.0 x4 links, will DMI 3.0 be sufficient to carry that bandwidth? From what I know, one PCIe 3.0 x4 link is equal to 32Gbps, so in RAID 0 it will be 64Gbps, yes?
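For the Gb/s versus GB/s questions in these comments, the conversion can be sketched as below. It assumes the standard PCIe 3.0 figures (8 GT/s per lane, 128b/130b encoding) and that DMI 3.0 is four lanes at that same rate. Packet and protocol overhead, which this sketch ignores, plausibly accounts for the remaining gap down to the ~3.5 GB/sec ceiling reported in the article above. The function names are hypothetical.

```python
PCIE3_GT_PER_LANE = 8.0          # PCIe 3.0 signalling rate, gigatransfers/s per lane
ENCODING_EFFICIENCY = 128 / 130  # PCIe 3.0 uses 128b/130b line coding


def raw_gbit_per_s(lanes: int) -> float:
    """Headline figure: lanes x 8 GT/s, which is where "32 Gb/s" for x4 comes from."""
    return lanes * PCIE3_GT_PER_LANE


def usable_gbyte_per_s(lanes: int) -> float:
    """Bytes/s after line coding, before packet/protocol overhead (8 bits per byte)."""
    return raw_gbit_per_s(lanes) * ENCODING_EFFICIENCY / 8


ssd_x4 = usable_gbyte_per_s(4)  # one NVMe SSD on an x4 link
dmi3 = usable_gbyte_per_s(4)    # DMI 3.0: four lanes at 8 GT/s
print(raw_gbit_per_s(4))                          # 32.0
print(round(ssd_x4, 2))                           # 3.94
print(round(2 * ssd_x4, 2), ">", round(dmi3, 2))  # 7.88 > 3.94
```

So "32 Gb/s" and "~3.5 GB/s" describe the same x4-class link in different units, and two x4 SSDs behind DMI 3.0 can offer roughly twice what that single link can carry.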


I really appreciate the testing you have done here. I built a Z170 Deluxe, PCIe 750 SSD, GTX 980 Ti system and called Intel for support after I buggered up my UEFI settings. My support guy couldn't find any answers (I'm sure he was googling everything I had done), but having him on the line gave me the courage to forge ahead, and I finally got the drive back and working. I do think I have a ways to go, though. I only have two SATA ports available now, SATA 3 and 4. In reading your posts, I believe I forgot to go back and disable Hyperkit. Obviously Hyperkit isn't needed for a drive installed in PCIe slot 3. Doh!

Keep up the good work


For those of you wanting to do M.2 RST SSD caching: to get the drive to show up, just change the boot option as mentioned in the article. Thank you guys so much for finding and sharing these settings!!


Thanks guys, the diagram of the lanes on the next page helped me a lot. I have been struggling to get my PCIe RAID running alongside a SATA one; now I understand why, and I see how I can do it.