FreeSync and Frame Pacing Get a Boost

AMD has more than just a UI change in store for Radeon users with the new Crimson Edition of Radeon Software.

Make sure you catch today's live stream, which we are hosting with AMD to discuss the new Radeon Software Crimson driver in much more detail. We are giving away four Radeon graphics cards as well! Find all the information right here.

Earlier this month AMD announced plans to end the life of the Catalyst Control Center application for control of your Radeon GPU, introducing a new brand simply called Radeon Software. The first iteration of this software, Crimson, is being released today and includes some impressive user experience changes that are really worth seeing and, well, experiencing.

Users will no doubt lament the age of the previous Catalyst Control Center; it was slow, clunky and difficult to navigate around. Radeon Software Crimson changes all of this with a new UI, a new backend that allows it to start up almost instantly, as well as a handful of new features that might be a surprise to some of our readers. Here's a quick rundown of what stands out to me:

- Opens in less than a second in my testing

- Completely redesigned and modern user interface

- Faster display initialization

- New clean install utility (separate download)

- Per-game Overdrive (overclocking) settings

- LiquidVR integration

- FreeSync improvements at low frame rates

- FreeSync planned for HDMI (though not implemented yet)

- Frame pacing support in DX9 titles

- New custom resolution support

- Desktop-based Virtual Super Resolution

- Directional scaling for 2K to 4K upscaling (Fiji GPUs only)

- Shader cache (precompiled) to reduce compiling-induced frame time variance

- Non-specific DX12 improvements

- Flip queue size optimizations (frame buffer length) for specific games

- Wider target range for Frame Rate Target Control

That's quite a list of new features, some of which will be more popular than others, but it looks like there should be something for everyone to love about the new Crimson software package from AMD.

For this story today I wanted to focus on two of the above features that have long been a sticking point for me, and see how well AMD has fixed them with the first release of Radeon Software.

FreeSync: Low Frame Rate Compensation

I might be slightly biased, but I don't think anyone has done a more thorough job of explaining and diving into the differences between AMD FreeSync and NVIDIA G-Sync than the team at PC Perspective. Since day one of the G-Sync variable refresh release we have been following the changes and capabilities of these competing features and writing about what really separates them from a technological point of view, not just pricing and perceived experiences.

One disadvantage that AMD has had when going up against the NVIDIA technology was prominent at low frame rates. AMD has had "variable refresh rate windows" that have varied from a narrow 48-75Hz (our first 21:9 IPS panel) to a much wider 40-144Hz (TN 2560×1440). When your game's render rate (frame rate) went above or below that window, you would revert to a fixed refresh rate with V-Sync either enabled or disabled, at the user's selection. The result, when your frame rate went below the minimum of the VRR range, was a sub-par experience – you had either tearing on your screen or judder/stutter in the animation. Those were the exact things that VRR technology was supposed to prevent!

NVIDIA G-Sync solved the problem from the beginning by doing something called frame doubling – when your in-game FPS dropped below the bottom of the VRR window, it would double the frame rate being sent to the screen in order to keep the perceived frame rate (to the monitor at least) high enough to stay inside the range. That gave us no effective minimum variable refresh rate for NVIDIA G-Sync monitors – and for users that really wanted to push the fringe of performance and image quality settings, that was a significant advantage in nearly all gaming environments.

While NVIDIA was able to handle that functionality in hardware thanks to the G-Sync module embedded in all G-Sync displays, AMD didn't have that option as they were piggy-backing on the Adaptive-Sync portion of the DisplayPort standard to get FreeSync working. The answer? To implement frame doubling in software, a feature that I have been asking for since day one of FreeSync's release on the market. AMD's new Radeon Software Crimson is the company's first pass at it, with a new feature called Low Frame Rate Compensation (LFC).

The goal is the same – to prevent tearing and judder when the frame rate drops below the minimum variable refresh rate of the FreeSync monitor. AMD is doing it all in software at the driver level, and while the idea is easy to understand it's not as easy to implement. An algorithm monitors the draw rate of the GPU (this is easy from internal trackers) and when it finds that the rate is about to cross the VRR minimum of a monitor, it doubles the frame rate being sent from the GPU to the monitor. That "doubled" frame is a duplicate, with no changed data, but it prevents the monitor from going into a danger zone where screen flicker and other bad effects can occur. For a visual representation of this behavior (in hardware or software) look at the graph below (though it uses data from before AMD implemented LFC).
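To make the idea concrete, here is a minimal sketch of the frame repetition logic. It is not AMD's driver code: the function name `lfc_refresh` is hypothetical, and the rule it uses (repeat each frame the smallest whole number of times that brings the refresh rate back up to the VRR minimum) is my assumption about how such an algorithm could work, generalizing "doubling" to tripling and beyond at very low frame rates.

```python
import math


def lfc_refresh(fps, vrr_min, vrr_max):
    """Return (repeat_count, refresh_hz) for a given render rate.

    Assumption (not AMD's documented algorithm): each frame is shown
    the smallest whole number of times that lifts the effective
    refresh rate to at least vrr_min.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= vrr_min:
        # Inside the window the panel follows the GPU directly.
        # Above it, the panel simply tops out at vrr_max.
        return 1, min(fps, vrr_max)
    repeat_count = math.ceil(vrr_min / fps)
    return repeat_count, fps * repeat_count


# A 35-90Hz window, like the ASUS MG279Q tested in this article.
print(lfc_refresh(60, 35, 90))  # (1, 60): already inside the window
print(lfc_refresh(30, 35, 90))  # (2, 60): each frame is sent twice
print(lfc_refresh(10, 35, 90))  # (4, 40): each frame is sent four times
```

Under this rule the repeated rate always lands below twice the VRR minimum, so it fits inside any window whose maximum is at least double its minimum.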

There are a couple of things to keep in mind with AMD's LFC technology though. First, it is automatically enabled on supported monitors and there is no ability to turn the feature on or off manually. Not usually a big deal – but I do worry that we'll find edge cases where LFC will affect game play negatively, and having the ability to turn this new feature off when you want would help troubleshooting at the very least.

Also, AMD's LFC can only be enabled on monitors in which the maximum refresh rate is at least 2.5x the minimum variable refresh rate. Do you have a monitor with a 40-144Hz FreeSync range? You're good. Do you have one of the first 48-75Hz displays? Sorry, you are out of luck. AMD has a wide range of FreeSync monitors on the market today and they don't actively advertise that range, so you won't know for sure without reading other reviews (like ours) if your monitor will support Low Frame Rate Compensation or not – which could be a concern for buyers going forward. 4K FreeSync monitors, which often have a ~32Hz to 60Hz range, will not have the ability to support LFC, which is unfortunate as this is one key configuration where the feature is needed! (As another aside, this might explain why NVIDIA has been more selective in its panel selection for G-Sync monitors; even though they frame double in hardware they still need at least ~2x between the minimum and maximum refresh rates.)
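The 2.5x rule is easy to check yourself once you know a monitor's FreeSync range. The sketch below applies it to the ranges mentioned in this article; the helper name `supports_lfc` is hypothetical, and the 2.5 threshold is simply the figure quoted above.

```python
def supports_lfc(vrr_min, vrr_max, required_ratio=2.5):
    """True if the maximum refresh rate is at least required_ratio
    times the minimum variable refresh rate."""
    return vrr_max >= required_ratio * vrr_min


# FreeSync ranges (min Hz, max Hz) quoted in this article.
ranges = [(40, 144), (35, 90), (30, 75), (48, 75), (32, 60)]
for vrr_min, vrr_max in ranges:
    verdict = "LFC" if supports_lfc(vrr_min, vrr_max) else "no LFC"
    print(f"{vrr_min}-{vrr_max}Hz: {vrr_max / vrr_min:.2f}x -> {verdict}")
```

The 30-75Hz range sits exactly on the 2.5x boundary, which is why the comparison is written as "at least" rather than "more than".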

I have spent the last couple of days with the new Crimson driver and tested Low Frame Rate Compensation with several FreeSync monitors including the ASUS MG279Q, the ASUS MG278Q, the Acer XR341CK and the LG 29UM67. Of those four displays we have in-house, only the LG 29UM67 has a FreeSync range that is outside the support criteria for LFC and as I expected, the monitor behaved no differently with the Crimson driver than it did with any software before it. All three of the other displays worked though, including the 35-90Hz range on the MG279Q, the 40-144Hz on the MG278Q and the 30-75Hz range on the UltraWide Acer XR341CK.

Testing the ASUS MG278Q with Low Frame Rate Compensation. Never mind the cable clutter!

Using an application we have internally to manually select a frame rate, I was able to bring the monitors below those previously tested ranges and the LFC behavior seemed to be working as expected. With the V-Sync setting in the game off, moving to 30 FPS on the ASUS MG279Q did not result in screen tearing of any kind. I was able to go all the way down to 10 FPS and didn't see any kind of visual artifacting as a result of the frame doubling. I duplicated this process with the other two monitors as well – both passed.

Of course I ran through some games as well including Grand Theft Auto V where I could easily adjust settings to get frame rates below the VRR range of the monitors on our Radeon R9 380X test bed. The game was able to move in and out of the variable refresh rate minimum without tearing or judder that would have previously been a part of the FreeSync experience.

That's great news! But there were a couple of oddities I need to report. At one point, with both a Radeon R9 295X2 and the R9 380X, the ASUS MG279Q exhibited some extreme flickering when in the variable refresh rate range and below. This kind of flicker reminded me of the type we saw on older G-Sync monitors when hitting an instantaneous 0 FPS frame rate – the frame rate doubling was influencing the brightness of the screen to a degree that we could pick it up just looking at it. I am not sure if this flickering we saw is an issue with the driver or with the monitor but I only saw it 2-3 times in my various reboots, monitor swaps and GPU changes.

I will also tell you that the smoothness of the doubled frame rate with AMD FreeSync and LFC doesn't feel as good as that on our various G-Sync monitors. I am interested to get more detail from AMD and NVIDIA to see how their algorithms compare on when and how to insert a new frame. My guess is that NVIDIA has had more time to perfect its module logic than AMD's driver team has, and that the fringe cases that crop up (Are you just on the edge of the VRR range? How long have you been on that edge? Is the frame rate set to spike back up above it in the next frame or two?) don't yet get any special handling in AMD's driver.

Despite those couple of complaints, my first impression of LFC with the Crimson driver is one of satisfied acceptance. It's a great start, there is room to improve, but AMD is listening and fixing technologies to better compete with NVIDIA as we head into 2016.

Frame Pacing: Legacy DX9 Support

It's been nearly three years since we first published our full findings on frame pacing and how it affects gaming experiences in multi-GPU CrossFire gaming configurations. Frame Rating has been our on-going project in that regard ever since and AMD was able to again make significant strides in that area from where it began. When AMD first delivered a frame pacing enabled driver for DX10 and DX11 games in August of 2013, they promised that "phase 2" would include support for DX9 in the future. Well, nearly 2.5 years later, I present to you, phase 2.

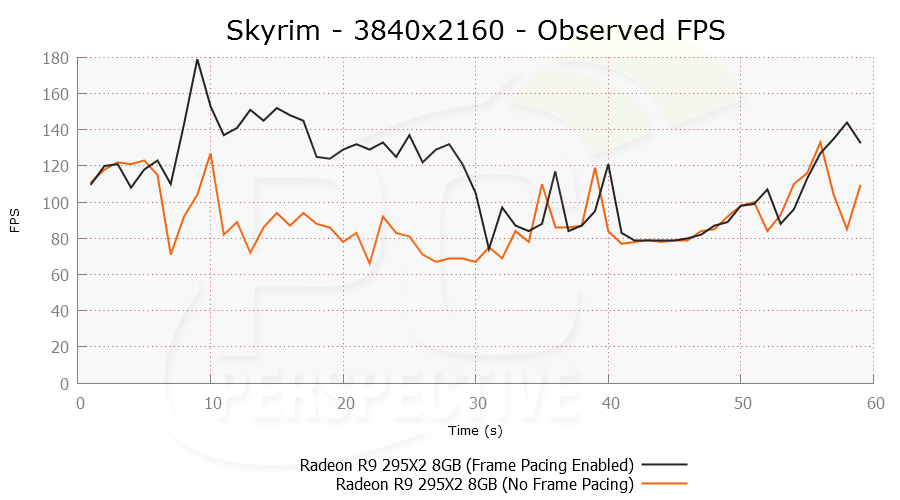

This is Skyrim, the last remaining DX9 title that we use in our tests (most of the time), running at 3840×2160 (4K) on the Radeon R9 295X2 dual-Hawaii graphics card. The orange line is a result from an older driver, before DX9 frame pacing was enabled and you can clearly see the effect that AMD's older driver architecture had on gaming experience. That ugly blob of orange on the Frame Times graph shows you the "runt" frames that the GPUs were rendering but not displaying in an even manner, artificially raising performance results and making the gamer's experience a stuttery mess.

The black line is what we tested with the new Radeon Software Crimson driver – a much more polished experience! Frame pacing is 1000x better than it was before and you can just FEEL the difference when playing the game. The average frame rate the user actually experiences jumped from ~90 FPS to ~130 FPS, which is a nice improvement at the 4K resolution. Frame time variance at the 95th percentile drops from about 7ms to 2ms, showing in data what playing the game for yourself does – it's just so much better than it was before.
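For readers who want to see why an average FPS number alone hides this kind of problem, here is a small sketch. The frame times are made up for illustration and are not our Skyrim capture data, and "variance" here is assumed to mean the absolute change in frame time from one frame to the next, which may differ from the exact Frame Rating calculation. The helper names are hypothetical.

```python
import math


def average_fps(frame_times_ms):
    """Average frames per second over a list of frame times in ms."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def frame_to_frame_deltas(frame_times_ms):
    """Absolute change in frame time between consecutive frames."""
    return [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical traces: alternating short/long frames vs. evenly paced.
unpaced = [2, 14] * 50
paced = [8] * 100

for name, trace in (("unpaced", unpaced), ("paced", paced)):
    deltas = frame_to_frame_deltas(trace)
    print(name, average_fps(trace), percentile(deltas, 95))
# unpaced 125.0 12
# paced 125.0 0
```

Both traces report the same raw average, but the unpaced one swings by 12ms between almost every pair of frames, which is the kind of stutter a frame counter never shows.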

This DX9 frame pacing improvement should work across all compatible Radeon GPUs and all DX9 gaming titles, though it's more than fair to say that the number of DX9 games that need CrossFire multi-GPU support is low at this point. I'm glad to see it in there – but its impact on sales and user goodwill is probably minimal as we hit Thanksgiving of 2015.

Closing Thoughts

These are just a couple of the new features found in the AMD Radeon Software Crimson Edition release. I would unequivocally recommend that users of Radeon GPUs download this new software and try it out. It's faster, easier to use, implements performance enhancements for new PC games and makes nearly every facet of the Radeon user experience better.

The formation of Raja Koduri's new Radeon Software Group is just starting but I am impressed with the first new release under his watch. AMD will need more than Crimson to compete with NVIDIA in 2016, but I have more confidence than ever that it can be done. And I think 2015 still holds a couple of interesting surprises for us to discover together.

Caught something…

This is Skyrim, the last remaining DX9 title that we use in our tests (most of the time), running at 3840×2160 (4K) on the Radeon R9 295X2 dual-Hawaii graphics card.

That said, I can’t wait to get home and try this out.

Whoops! Wishful thinking I guess. 🙂

“This is Skyrim, the last remaining DX9 title that we use in our tests (most of the time), running at 3840×2160 (4K) on the Radeon R9 295X2 dual-Fiji graphics card.”

Dual-Hawaii?

Glad to see the fix for pacing, I still play a lot of DX9 games. I’m also really excited to see what they do with HDMI FreeSync. They demoed it months and months ago, but not a peep since.

Speaking of G-Sync, what’s the word on that guy that started modifying NVIDIA drivers, EDID, and registry entries to get G-Sync to work on all DP displays? I believe he was basing his work off of the leaked Asus software G-Sync driver. Last I heard, he was working on G-Tools Beta 2, then NVIDIA shut his site down or something. Someone needs to pick up that torch and bring G-Sync to all AdaptiveSync displays. Tear down this proprietary wall!

I wonder what spin will be put on why Gsync still needs a module, and why Gsync should still cost a lot more money now that we know this about the new Radeon drivers.

The spin will be, “Waaah, Gsync is still bettAr and AMD sux!!!”

As was pointed out, Gsync is still a better solution since the issues with low refresh rates are handled all the time, instead of just by a subset of monitors.

Let’s be clear: Gsync has always been the technically superior technology. The comparison between Gsync and Freesync comes down to benefits/cost.

Freesync is better, since it works for the same subset of monitors gsync does (those with max FPS > 2.5x min FPS) and ALSO on monitors with narrower refresh rate intervals, albeit not as well.

Gsync is just a subset of Freesync now. But hey, you just pay 200€ to not be able to use as many monitors, that’s good no ?

Actually G-Sync is still superior. It works on all monitors, not just some. It goes lower. And it doesn’t take any processing power from the GPU to do what it does (because it uses external hardware). Further, since G-Sync works on more generations of cards than FreeSync, coupled with the fact that nVidia holds nearly triple the market share, that means that three or four times as many people can use it without having to get a new card. Lastly, in quantity, the G-Sync module doesn’t cost $213/200€, it’s more like $125/117€ … at least here in the States.

Having said that, this driver is very good news for everyone. It means that RTG (Radeon Technology Group) may actually give nVidia some REAL competition in the market, which will either lower prices or drive more innovation.

Another way to look at it is that nvidia limits the displays that are gifted the gsync treatment to those that already support larger vrr ranges.

Freesync is more along the lines of let a thousand flowers bloom, some with narrower ranges, some with wider. Perhaps some people are willing to spend a bit less on a cheaper freesync monitor with a narrower range if they know they have hardware that is able to stay above the minimums more often than not.

More options and choice is NEVER a bad thing, at least for people in the know. For those that want the feature, it adds another thing to look for in displays. And we as consumers should reward monitor makers that put the better tech together for gaming.

I’d just like to see a hybrid so I don’t have to stick with just one GPU vendor.

Freesync is an open standard that even Nvidia can use, and Intel has already committed to using Freesync with its integrated GPU’s in the future.

So once Freesync gets good enough, it may just become the open standard that even Nvidia will end up using. That’s the good thing about AMD, and one of the reasons I support them. TressFX is also an open standard that Nvidia can (and does) easily optimize for, and many people think it looks better than hairworks.

Nvidia has tested ALL panels and made a list of which ones they certify and allow to be used with g-sync. They won’t allow just any panel to be thrown in unless it meets their requirements to provide the experience that g-sync is meant to provide. Which is a good thing, since you won’t have the cheapest, random (insert no-name brand) panel a monitor maker can get their hands on thrown together into a g-sync monitor.

Even with how much people complain about the price premium, that premium pays for all the work that went into making it work like it was intended. Freesync could get there in time, but that time and $ will be on each monitor manufacturer to do the work themselves, whereas nvidia did pretty much all the leg work in g-sync.

The argument for more expensive gsync gets weaker and weaker with time.

The last holdouts will be Allyn and Ryan doing cartwheels and back flips to find infinitesimal ways in which gsync is a superior solution.

If you or someone else had a budget, would it not be better to put that money into the core system, like upgrading a gpu to something stronger or the cpu to something better?

These calculations don’t work on the tech crowd because they get top end hardware rained on top of them, but for people with non infinite budgets, the cost and increased options of freesync will be a clear advantage.

“It works on all monitors, not just some.”

Really? ALL monitors? So I can go buy an Nvidia GPU and plug it into my several-year-old ViewSonic monitor and use Gsync?

Really?

It does have a performance penalty though, as high as 8 fps. Freesync can have performance penalties too, but it’s much lower.

Post links to published benchmarks that show this performance penalty. It was said in the stream that there is no penalty, although what we’d all really like to see is PCPer verifying this as well.

The penalty that was commented about is in kepler gpu’s. The reason was that the hardware in kepler wasn’t 100% VRR. There is a small bit that kepler cards had to do in software, whereas maxwell cards have it in hardware.

And what I referred to was the part where he said “freesync can have performance penalties too” which I commented on.

Although the AMD rep did say in the stream ‘no penalty’, I’m like most people and will believe it when someone like PCPer has verified whether there is indeed a penalty for LFC on AMD cards.

You didn’t point out any positive for G-sync over F-Sync.

Wow it works on “all” monitors?

So my monitor with no gsync module can use gsync now? no.

Gsync is a cash grab by nvidia. (I use nvidia cards, in case you shout fanboy).

In the real world, most folks only need to be able to use a monitor technology with a single monitor. The one sitting in front of them.

Choice is good, but throwing a comment out there about how many monitors one can “use” a certain feature with, when in reality you buy a monitor based on its feature set and then use (or not) those features, well, that is a bit disingenuous.

Superior technology? That is highly debatable. Nvidia created g-sync and put a lot of effort into it to avoid changing the display port standard. This was the wrong way to go about it from the beginning. Free-sync makes it part of the display port standard. It is pretty obvious to me that refresh rate should be part of the standard and not implemented in a proprietary manner. It is great that Nvidia pushed the idea, but a proprietary solution is not good for consumers. They got a product out first, but the competition seems to have mostly caught up.

With AMD’s driver improvements, there is very little difference between free-sync and g-sync. The idea of going down to refresh rates lower than 9 (minimum for free-sync, I think) is ridiculous. With anything below 24, you lose the illusion of smooth motion and it turns into a slide show anyway. G-sync probably still has better over-drive to prevent ghosting though; in previous pcper reviews, free-sync displays were close, but not quite as good as the g-sync displays. In free-sync displays, this is up to the monitor makers and the companies that make the scalers for them. AMD is not involved with making scalers, and they shouldn’t be. This is why standards exist.

The third party scalers makers and monitor makers will catch up with the overdrive performance of g-sync scalers eventually. It seems that the differences are mostly not noticeable during normal use already. It is unclear whether the better performance of the scaler in the g-sync module is actually due to the g-sync module alone. It could be due to the g-sync module and careful selection of panels for not only refresh rate range, but also for more uniform or predictable pixel response times.

G-sync will continue to be significantly more expensive than free-sync displays as long as Nvidia needs to charge more for the g-sync module than what it cost for a standard scaler. G-sync modules are currently quite expensive because they use an expensive FPGA. Even if they make a g-sync ASIC, it would probably still be more expensive than standard scalers due to low volume. Every display needs a scaler, but only a small number of proprietary gaming displays gets a g-sync module, whether it is an ASIC or an FPGA. Ignoring the low volume issues, the g-sync scaler as an ASIC would probably still need to be more expensive due to the higher complexity compared to a standard scaler with no frame buffer.

I would assume that Nvidia could support free-sync easily. If they are going to support g-sync on laptops without a g-sync module, then they will need to support frame multiplication on the GPU in addition to overdrive calculations. The question is, how long will they make their customers pay more for g-sync when they could give them almost the same experience for a cheaper price by supporting free-sync. Free-sync will probably be widely supported soon since it doesn’t require significant changes on the scaler, like adding a frame buffer. If a display maker can get a free-sync enabled scaler for almost the same price as an older scaler design, then why wouldn’t they add that feature?

I would have a hard time recommending either right now. Most people I know are not upgrading both their display and their video card at the same time, so the current vendor lock-in is quite annoying. Most people I know are on a limited budget, so I would probably have to recommend AMD cards right now since there are some very good deals. This locks them in to free-sync displays, if they want VRR.

GSync does benefit from a module. Note that they said in the article that Freesync still isn’t as good even with the software fix, nor is it clear if it can be as good.

Also, future GSync modules will add in other technology such as light strobing in asynchronous mode.

So the plan was basically “replace the scaler with the GSync module and add in future tech in a way that is optimized for the best experience” rather than retrofitting technology not intended for this purpose (though Freesync is getting better… good luck to them).

Also, if not clear, GSync modules aren’t needed in laptops because the screen’s characteristics are known, thus a scaler isn’t needed. In a desktop monitor that can be paired with any number of GPU’s we need a scaler.

Uhh… I can’t see how to do one of the most basic things this application should do – change refresh rate. Am I blind or do I have to use Windows for this now? I have a MG279Q and I like to play some games at 144Hz instead of the 90Hz freesync.

Frame rate target control doesn’t appear to work in Fallout 4 either. I was excited about this but we’re going 0-2 so far.

I haven’t had a chance to try the new stuff yet, but FRTC works in 15.11.1 with Fallout 4

Fallout 4 has a 60fps limit anyways (unless you override it), so it’s all moot to me anyhow.

wow! was checking out legion trailer (wow latest) before and after this update! wow just wow, it is subtle but if you have good eyes, you’ll notice a huge improvement in realism

… this update made the trailer video look better ?

.. are you trolling us?

If he ran the trailer full screen in asynchronous mode he might be running at 30FPS (30Hz effectively though I’d have to read the AMD fix details again). If the monitor needed 40FPS (40Hz) to be in asynchronous mode then he’d default to VSYNC ON or VSYNC OFF mode.

So with the update he could be staying in asynchronous mode thus simply showing each frame of the video the proper time.

If he was at say 75Hz then the 30FPS would have to “fit” that (like 24Hz movies to 60Hz TV’s) which works fine at 60Hz but might not be as smooth at a non-multiple of 30.

Anyway, I think people get where I’m going with this…

I should have said it could be asynchronous making it smooth versus synchronous creating judder.

Great Job Raja. Keep it this way.

Thanks Ryan. Great job you guys have done in this area of technology.

You can feel you contributed toward improving user experiences particularly with AMD.

Happy Thanksgiving.

I am glad to hear red team has put such a focus on these core areas. I have not owned an AMD GPU since my 5870 (primarily due to 290x bit coin mining era of stupid pricing – Not amd’s fault). About ready to give AMD another try as I never had any issues. I still remember fondly when I bought my X1950XTX back in the day for my first serious pc build.

It’s AMD’s fault for not opening up a direct channel (one per customer) for the parts, if the retail channel was not going to follow MSRP! That would have stopped the speculation and saved AMD’s market share!

And totally alienated their retail partners.

You’re an idiot

Well, the 380x just came out, and the 390’s a great bang for the buck card (and Gigabyte’s is only 10″, so will fit in many medium cases). And if you don’t want to upgrade now, next year will have the 16nm stuff with HBM or GDDR5X.

I highly recommend AMD cards. I used to be an Nvidia fanboy, but having tried both brands, I can say they’re both good.

I don’t understand why people are so hell-bent on supporting one company slavishly, they’re all just after your money, and if they ever become a monopoly, they’re liable to act like jerks.

Which is why I try to buy from the company with less marketshare, which is AMD right now. If it was the other way around though, I’d be buying Nvidia, of course. I’m not stupid, I know any company will probably screw me over if it becomes a monopoly.

Crimson broke Rocket League. Now Fury X stutters in a game that should run on a graphing calculator. LOL

Let’s see how many Linux based OS questions get answered, and Also Vulkan questions! I’ll be looking for AMD to produce some Vulkan demos shortly or things will not look so good for AMD. That Steam OS compatibility needs to be addressed, I’m not going to windows 10, I’m staying with 7, and migrating to Linux!

Windows-10 DX12 is the current and future of Games/gamers. If you want to play the latest DX12 games, then you need Windows-10 for DX12 KK!

Does freesync work with eyefinity?

And does it work in non-full screen mode now?

Curious to see other DX9 titles benefiting from Frame Pacing.

Did AMD ask you to cover Skyrim or did you pick it because it was in your benchmark suite? At any rate, there are some great taxing DX9 games like the Witcher 2. Can you bench that game as well?

Skyrim is one of the most popular dx9 titles that keep getting visual improvements with mods. Makes sense to keep including it until bethesda releases a replacement.

The main other dx9 games people play are mobas… and those are not exactly performance hogs.

Cheers for AMD and PCPER

love this new Crimson Idea for drivers, and the option to customize each game.

i like the integration.

The GUI is really nice.

Yeah? You like having huge ads in your control panel?

Two clicks.

That’s all it took to make the ads go away. Two clicks.

Is that too hard for you?

This is REALLY cool!

hey pcper / community

this might be a good area for ya’ll to test.

my monitor Acer XG270HU is only showing a FreeSync range of 40 to 60…

Anybody else experiencing wrong information here?

-nagus

nevermind found my answer here

https://www.reddit.com/r/Amd/comments/3u9m6v/lost_40144hz_freesync_range/

Looks like the crimson driver resets your windows refresh rate which you need to bump back up to 144hz.