Ken and I have been refreshing our Google search results ever since seeing the term 'VROC' slipped into the ASUS press releases. Virtual RAID on CPU (VROC) is a Skylake-X-specific optional feature carried over from Intel's Xeon parts, which use RSTe to create a RAID without the need for the chipset to tie it all together.

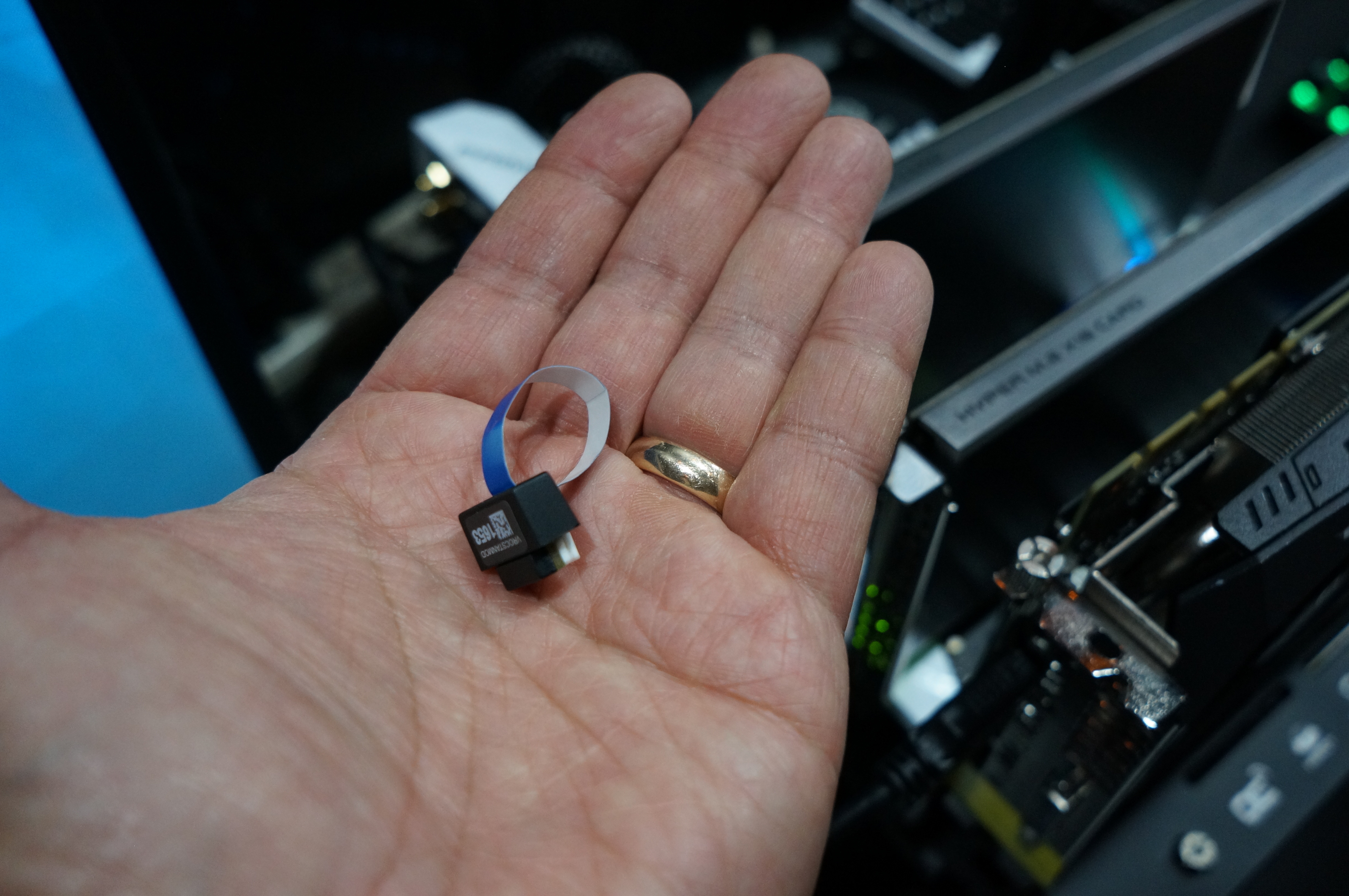

Well, we finally saw an article pop up over at PCWorld, complete with a photo of the elusive Hyper M.2 X16 card:

The theory is that you will be able to use the 1, 2, or 3 M.2 slots of an ASUS X299 motherboard, presumably passing through the chipset (and bottlenecked by DMI), or you can shift the SSDs over to a Hyper M.2 X16 card and have four piped directly to the Skylake-X CPU. If you don't have your lanes all occupied by GPUs, you can even add additional cards to scale up to a max theoretical 20-way RAID-0 supporting a *very* theoretical 128GBps.

A couple of gotchas here:

- Only works with Skylake-X (not Kaby Lake-X)

- RAID-1 and RAID-5 are only possible with a dongle (seriously?)

- VROC is supposedly only bootable when using Intel SSDs (what?)

Ok, so the first one is understandable given Kaby Lake-X will only have 16 PCIe lanes directly off of the CPU.

The second is, well, annoying, but understandable once you consider that some server builders may want to capitalize on the RSTe-type technology without having to purchase server hardware. It's still a significant annoyance, because how long has it been since anyone has had to deal with a freaking hardware dongle to unlock a feature on a consumer part? That said, most enthusiasts are probably fine with RAID-0 for their SSD volume, given they would be going purely for increased performance.

The third essentially makes this awesome tech dead on arrival. Requiring only Intel branded M.2 SSDs for VROC bootability is a nail in the coffin. Enthusiasts are not going to want to buy 4 or 8 (or more) middle of the road Intel SSDs (the only M.2 NAND SSD available from Intel is the 600p) for their crazy RAID – they are going to go with something faster, and if that can't boot, that's a major issue.

More to follow as we learn more. We'll keep a lookout and keep you posted as we get official word from Intel on VROC!

Are these Windows limitations, Intel limitations, or what?

It seems that you’d have to go out of your way to make something like this non-bootable. All you would need to do is make a small partition on one drive to boot the system, and then the rest could be brought up later.

Intel limitation, and when it comes to M.2 devices connected directly to the CPU, to get a single bootable RAID working takes some serious doing on the BIOS/CPU side of things.

That’s why I suggest having a small non-RAID bootable partition to load things from. This is how Linux does things. Can’t Windows do this?

Also, since this depends on Intel’s Windows RST driver, it seems this is more a limitation for Windows. I’d be curious how Intel would stop Linux from RAIDing a bunch of these drives, as all Linux needs to see from the hardware is JBOD.

You are assuming that a bootable partition holds only the Windows installation files and nothing else. A bootable RAID lets you keep Windows and any other software, such as Visual Studio, SQL Server, or GPU rendering software, along with your data and anything else you plan to run for performance.

All of these work together on the BOOTABLE RAID at optimal performance, without DMI 3.0 bottleneck issues, because they bypass DMI and talk directly to the CPU.

If you only have the Windows installation partition on the RAID, then you are saying that the rest of the software that you install on the C: drive will not benefit from that RAID. That solution only improves your Windows performance.

Without a BOOTABLE RAID, you need one normal non-bootable RAID for all your software and data (for performance and redundancy) and another BOOTABLE RAID array for Windows and its own performance. And I don’t know how that will work if your software makes system calls for GPU acceleration, which has to talk to both the CPU and the GPU and might bottleneck on DMI 3.0.

That’s very disappointing, I hope there’s just some misunderstanding. If 100% true though, this is more disappointing than JROC’s rap career.

I suspect that it will be compatible with more than only Intel SSDs, but unconfirmed at this point.

If I understand this correctly, this Hyper M.2 card supports only NVMe drives right? Then meh…

I would seriously consider it for normal AHCI devices. Don’t care about RAID, but for drive pooling this looks like gold without the hassle of cabling and RAID/HBA controllers.

A few AHCI devices won’t be bottlenecked by normal, chipset-powered M.2 ports.

You missed the point. Show me the motherboard with 20 SATA ports. 🙂

It would be a nice and clean solution without meters of cabling for 20 drives.

If you want a bunch of SATA drives and have PCIe slots free, just buy a freaking SAS card and hook stacks of drives onto each. A dual-16G SAS card and a couple of expander backplanes will give you as many SATA drives as you want with a pair of cables, and a dual-SAS card with basic breakout cables will still give you 8 drives per card (and those are normally 2x or 4x cards, so you could use m.2 to PCIe adapters and put four on one 16x slot).

There you go:

http://www.asrock.com/mb/Intel/Z87%20Extreme11ac/

I would like to know if VROC also supports Optane (i.e. has all the capabilities of RST but on the CPU) without needing to go through the PCH. X299 has been announced to support Optane Memory, but using an Optane cache drive could be more convenient in some circumstances (e.g. ASRock’s ITX board).

It should because Optane Memory presents as a standard NVMe device.

I am aware, but the ‘transparent caching’ of Optane is different from any normal RAID configuration. X299 already exposes only a subset of RSTe’s capabilities by default, so it may be that Optane caching with devices not connected via the PCH is either not present, disabled entirely, or contingent on a dongle as with RAID level capability.

IIRC in the enterprise sector Intel are only pushing the Optane Memory for caching, not the Optane m.2 drive, so there may not even be an RSTe Optane implementation to pilfer for consumer use.

I know it might be just me, but if I’m doing a crazy RAID-0 setup, I’m not going to be booting from it anyway, considering it’s probable that I won’t see much of a real world performance difference past a certain threshold.

I would likely consider something like this for my home VMWare lab, however. I use Workstation most of the time, but for users running ESXi, they’re probably booting from a USB key.

I’m getting decent performance with several simultaneous VMs running on an Intel 750 Series SSD (1.2TB) that is just for data (booting from a Samsung 950 Pro) with an Oracle Database being hit pretty hard, along with a mix of Windows and Linux guests, but the increased core availability on Skylake-X, coupled with massively scalable PCIe SSD RAID would make for one killer workstation setup.

What a ripoff that it will only work with Intel m.2 SSDs. After all, the Intel 600P is one of the worst performers; in some benchmarks it actually performs worse than some SATAIII SSDs. I suppose then, for those of us that want real performance, we’ll have to go with something like this, which is the same exact concept but with Samsung 960 Pro NVMe SSDs, so it is capable of reaching 13.5GB/s sequential reads and 8GB/s sequential writes.

That’s not exactly what they stated. It will work with non-Intel SSDs, you just won’t be able to boot from it.

Gee, how many vendors have now attempted an x16 AIC solution? Let me count the ways:

HP

Dell

Kingston

Intel

Supermicro

Highpoint

Broadcom

ASUS

Serial Cables

Still waiting for a bootable RAID-0 solution, i.e. one that is not proprietary and does not require a hardware “dongle”.

p.s. One of my contacts at another Forum contacted Highpoint, and they are working on making their SSD7101A bootable. Please contact them yourselves, to prove to Highpoint our interest in this functionality.

I mentioned the Highpoint NVMe RAID SSD in my post just two spots before yours….just wanted you to personally know that I thought of it and mentioned it first, haha

You’re right! I didn’t click on “this” link. 🙂

> it is capable of reaching 13.5GB/s sequential reads and 8GB/s sequential writes.

I believe that Highpoint may actually be able to come very close to that prediction of 13.5 GB/s. Time will tell. Here’s why.

Computing what we call MAX HEADROOM (max theoretical bandwidth), we get:

16 PCIe 3.0 lanes x 8 GT/s per lane / 8.125 bits per byte = 15,753.8 MB/second

(PCIe 3.0 uses a 128b/130b “jumbo frame” = 130 bits / 16 bytes per frame = 8.125 bits per byte.)

A few scattered measurements of the Samsung 960 Pro in RAID-0 arrays are showing an aggregate controller overhead of about 10%, which is excellent.

Thus, 15,753.8 MB/s x 0.90 = 14.178 GB/s, which is very close to 13.5 GB/s.
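For anyone who wants to redo the headroom arithmetic above, here is a minimal Python sketch. It assumes the standard PCIe 3.0 figures of 8 GT/s per lane and 128b/130b encoding; the function name `pcie3_max_mb_per_s` is made up for illustration, and the 10% overhead is only the anecdotal figure quoted in this comment, not a measured constant.

```python
def pcie3_max_mb_per_s(lanes: int) -> float:
    """Theoretical PCIe 3.0 bandwidth in decimal MB/s for a given lane count."""
    transfers_per_s = 8e9               # 8 GT/s per lane, one bit per transfer
    bits_on_wire_per_byte = 130 / 16    # 128b/130b: 130 bits carry 16 bytes
    return lanes * transfers_per_s / bits_on_wire_per_byte / 1e6


headroom = pcie3_max_mb_per_s(16)
# Assumed ~10% aggregate controller overhead (anecdotal, from the comment above)
after_overhead = headroom * 0.90

print(f"x16 max headroom:   {headroom:,.1f} MB/s")
print(f"after 10% overhead: {after_overhead / 1000:.3f} GB/s")
```

Changing the lane count (for example, 4 for a single M.2 slot) gives the ceiling for other configurations under the same assumptions.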

If Highpoint can deliver 2 x SSD7101A installed in the same motherboard, particularly if that config is also bootable, it is reasonable to expect the latter prediction to almost double.

A colleague contacted Highpoint and they told him that they are working on making that AIC bootable!

I’ve triple-checked their math, and it appears to be correct.

So, we need to get a review unit of the SSD7101A to Allyn, to test with 4 x Optane M.2 SSDs!

If the Samsung 960 Pro works in the SSD7101A, then the NVMe M.2 Optane should also work in that AIC.

NOTE WELL what Allyn wrote above, repeating:

“It should because Optane Memory presents as a standard NVMe device.”

The latter is a very important spec, imho.

Let’s hope the 2.5″ U.2 Optane also presents as a standard NVMe device.

@Allyn – When I first saw the “Hyper M.2 X16 card” from Asus, I immediately thought about using 2 x “Asus U.2/M.2 Hyper Kit Card” and having each of these U.2/M.2 connected to an Intel 750 U.2. Do you think that would be possible?

brief review with more photos here:

http://www.guru3d.com/news-story/computex-2017-vroc-technology-passing-10-gbsec-with-m2.html

Looks like Iometer measured 13,212.85 MB/second. Presumably, that measurement was done with 4 x NVMe M.2 SSDs in RAID-0.

Scroll down for a photo gallery: one of those photos shows two of these units installed in a single motherboard.

p.s. I’m starting to wonder if these AIC vendors are running into patent rights claimed by certain competitors. I have no other explanation for the fact that a general-purpose x16 NVMe RAID controller is still not available (see partial list of vendors in a Comment above).

Gordon Mah Ung et al. come down pretty hard on this VROC implementation:

https://www.youtube.com/watch?v=gLWanKZhCeo

EDIT: Gordon again, not so hard this time:

http://www.pcworld.com/article/3199104/storage/intels-core-i9-and-x299-enable-crazy-raid-configurations-for-a-price.html

VROC is a scam. Without the key, you cannot create a bootable RAID0 array, which makes the whole thing pointless and just a money grab.

At this point, having spent HOURS trying to get a Gigabyte Aorus X299 Gaming 7 motherboard configured to work with three 512GB Samsung 960 Pro NVMe drives, I can say this:

1. Third M.2 slot unrecognized. Maybe broken, but I don’t think so. Manual mentions RAID only working on that slot with VROC key… in our case, no drive was recognized in that slot (yes, we shuffled them around), ever. I bet an Optane would have been recognized. This is Intel “breaking” hardware.

2. I managed to get a RAID0 array configured somehow (BIOS settings led to inconsistent results), but Windows 10 1607 Install did not recognize it as a bootable partition. Reading between the lines, VROC-disabled RAID does not support bootable RAID0 arrays unless using Optane drives, or possibly when you have a VROC key installed.

3. So we were left with two individual M.2 slots “working”, one to boot, one as a data drive, and a perfectly working Samsung 960 Pro drive unable to be used. All the while, still strange things going on with the BIOS configurations and menus (NVME menu never showed up, for example)

4. You cannot buy a VROC key right now (at any price, let alone the $99 expected) and Intel really has some explaining to do, as does anybody who markets one of these X299 motherboards and advertises “3X M.2 RAID” because it is false advertising.

My conclusion is that Intel refuses to recognize the third slot unless it is an Optane SSD or a VROC key is in place. Intel has placed way too many limitations on their VROC scheme, and as it currently stands, it is literally a huge negative for any motherboard that has it. Stay away from X299 boards. VROC is a scam.

For reference, I’ve been building systems for 30 years; my current system is a Gigabyte Z170X Gaming 5 running 2 Plextor M.2 nvme drives in RAID0 as a boot drive. The X299 could have been a home run, but in my eyes, it is an utter failure, thanks to Intel’s greedy nonsense.

Small correction to the above post: All three slots do work; we apparently have a DOA Samsung 960 Pro stick. Still, the third slot only works in RAID-0 with a VROC key, according to the manual, and the bad drive led to some confusion about how the slots work. It now makes sense that we could not create an (unbootable) RAID0 array when the bad stick was in one of the other two slots; it looked more inconsistent when we thought all three drives were good.

Hi Ben Jeremy,

Since I’m about to receive the Gaming 7 mobo and two 960 Pros I plan on putting in a Raid 0, can you clarify if this is limited to 2 of the slots on the board?

Since my drives are not Intel and I do not have the VROC chip, am I limited to having these two 960s in a non-bootable raid 0 and then have an additional drive serve as my Windows boot drive?

Thanks,

Marko

Yes, I believe that configuration – a 2-drive RAID-0 array as a DATA drive, and a single NVME stick as a boot drive – would work without the VROC key.

Needless to say, Gigabyte’s tech support has been disappointing so far on this issue. I’m hoping to at least shame them into sending us a VROC key to test.

This motherboard, with a Samsung 960 Pro as a boot drive boots up in an eye blink, though. Still, there is the principle of the thing; and Intel’s promise of incredible performance running nvme sticks in RAID (no bottlenecks).

Hey Ben,

Thanks for the reply!

Let me know if you’ve had the chance to try booting it off a pair of your 960s in a RAID 0.

Thanks,

Marko

My laptop does not support booting from the M.2 NVMe drive.

So I set it up to boot from a SATA drive with an EFI script that finds the Windows boot loader on my NVMe drive. It then loads and runs it, so Windows boots from the NVMe drive.

It’s fast and has worked like a charm for more than a year now.

So it shouldn’t be a problem to do the same thing here: run the VROC RAID-0 Windows boot loader and get the full thing without paying Intel a dime.

Honestly, with Optane landing soon (if it hasn’t already), I don’t see much wrong with the Intel SSDs only part. As long as Intel has the fastest SSDs on the market, then it doesn’t matter. I will only oppose that part if Intel doesn’t stay on top of the SSD market.