The attempt to describe the visual effects Jensen Huang showed off at his SIGGRAPH keynote is bound to fail, not that this has ever stopped any of us before. If you have seen the short demo movie NVIDIA released earlier this year in cooperation with Epic and ILMxLAB, you have an idea of what they can do with ray tracing. However, they pulled a fast one on us: that movie was not pre-rendered, and they kept the hardware it was running on hidden. It was actually our first look at their real-time ray tracing. The hardware required for this feat is the brand-new RTX series, and the specs are impressive.

The ability to process 10 gigarays per second means that each and every pixel can be influenced by numerous rays of light in real time: perhaps 100 per pixel in a perfect scenario with clean inputs, or 5-20 in cases where their AI denoiser is required to fill in missing light sources or occlusions. The card itself works quite well as a light source, too. Rated at 16 TFLOPS and 16 TIPS, the card is happy doing floating point and integer calculations simultaneously.
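To put the 10 gigarays figure in per-pixel terms, the short sketch below divides the quoted peak rate by the number of pixels drawn per second. It assumes the entire peak rate is usable and spread evenly across every pixel with nothing left for other work, which real scenes will not manage; the resolutions and frame rates are just common examples.

```python
def rays_per_pixel(rays_per_second: float, width: int, height: int, fps: float) -> float:
    """Average rays available to each pixel in each frame, assuming an even split."""
    pixels_per_second = width * height * fps
    return rays_per_second / pixels_per_second


PEAK_RAYS_PER_SECOND = 10e9  # the quoted 10 gigarays/second, treated as fully usable

RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}

for name, (width, height) in RESOLUTIONS.items():
    for fps in (30, 60):
        budget = rays_per_pixel(PEAK_RAYS_PER_SECOND, width, height, fps)
        print(f"{name} @ {fps} fps: {budget:.1f} rays per pixel")
```

Under those generous assumptions, 1080p at 30 fps works out to roughly 160 rays per pixel and 4K at 60 fps to roughly 20, which suggests why high resolutions and frame rates push toward the 5-20 range where the denoiser has to help.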

The die itself is significantly larger than the previous generation at 754 mm², and it will sport a 300W TDP to keep it in line with the PCIe spec; if we get the chance, we will run it through the same tests as the RX 480 to see how well they did. 30W of the total power is devoted to the onboard USB controller, which implies support for VirtualLink.

The cards can be used in pairs, linked with Jensen's chest decoration, more commonly known as an NVLink bridge. More than one pair can be run in a system, but you will not be able to connect three or more cards directly.

Since a pair gives you up to 96GB of GDDR6 for your processing tasks, it is hard to consider that limiting. The price is rather impressive as well: compared to previous render farms, such as this rather tiny one below, you are looking at a tenth of the cost to power your movie with RTX cards. The card is not limited to proprietary engines or programs either; DirectX and Vulkan APIs are supported in addition to Pixar's software. NVIDIA's Material Definition Language will be made open source, allowing for even broader usage for those who so desire.

You will of course wonder what this means in terms of graphical eye candy, either pre-rendered quickly for your later enjoyment or rendered in real time if you have the hardware. The image below attempts to show the various features which RTX can easily handle. Mirrored surfaces can be emulated with multiple reflections accurately represented, again calculated on the fly instead of being preset, so soon you will be able to see around corners.
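Each mirror bounce comes down to reflecting the ray's direction about the surface normal, r = d - 2(d·n)n. The sketch below applies that textbook formula twice using a hypothetical periscope made of two 45-degree mirrors, turning a ray heading along +x up the +y axis and then back along +x, which is the "seeing around corners" case in miniature. How RTX hardware actually schedules these bounces is not described here.

```python
import math

Vec = tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec) -> Vec:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def reflect(d: Vec, n: Vec) -> Vec:
    """Mirror direction d about the unit surface normal n: r = d - 2(d.n)n."""
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])


# Hypothetical periscope: two mirrors tilted 45 degrees, facing each other.
mirrors = [normalize((-1.0, 1.0, 0.0)), normalize((1.0, -1.0, 0.0))]
direction: Vec = (1.0, 0.0, 0.0)
for bounce, normal in enumerate(mirrors, start=1):
    direction = reflect(direction, normal)
    print(bounce, tuple(round(c, 6) for c in direction))
```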

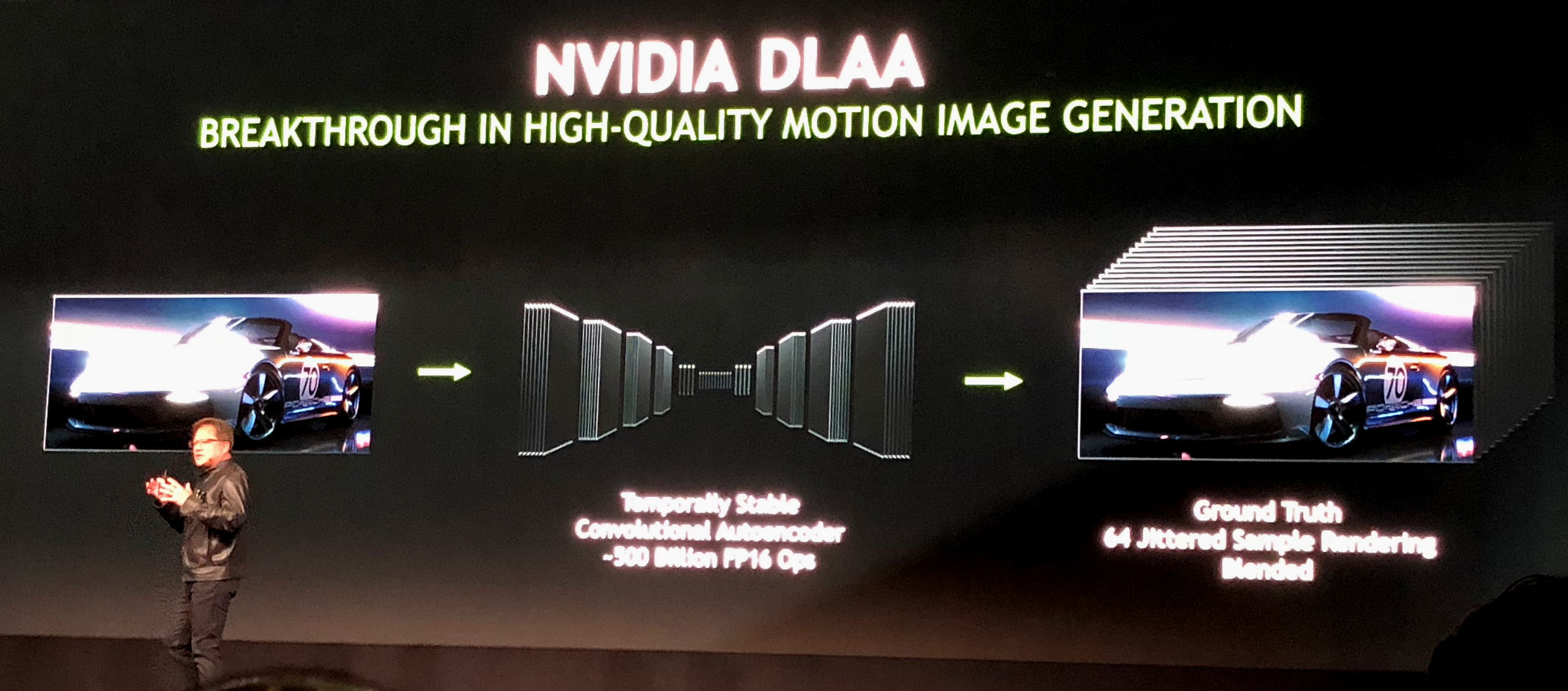

It also introduces a new type of anti-aliasing called DLAA, and there is no money to win for guessing what the DL stands for. DLAA works by taking an already anti-aliased image and training itself to provide even better edge smoothing, though at a processing cost. As with most other features on these cards, it is not the complexity of the scene that has the biggest impact on calculation time but rather the number of pixels, as each pixel has numerous rays associated with it.
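A toy cost model makes the pixels-versus-complexity point concrete. It assumes each ray walks an idealized bounding volume hierarchy (BVH) in roughly log2(triangles) steps, the usual textbook approximation, and ignores shading, memory traffic, and denoising; the 4 rays per pixel and the triangle counts are hypothetical.

```python
import math


def relative_cost(width: int, height: int, rays_per_pixel: int, triangles: int) -> float:
    """Toy work estimate: every ray does ~log2(triangles) BVH traversal steps."""
    rays = width * height * rays_per_pixel
    return rays * math.log2(triangles)


baseline = relative_cost(1920, 1080, 4, 1_000_000)

ten_x_geometry = relative_cost(1920, 1080, 4, 10_000_000) / baseline
four_x_pixels = relative_cost(3840, 2160, 4, 1_000_000) / baseline

print(f"10x the triangles: {ten_x_geometry:.2f}x the work")
print(f"4K instead of 1080p: {four_x_pixels:.2f}x the work")
```

In this model ten times the geometry costs only about 17% more work, while moving from 1080p to 4K quadruples it.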

This new feature also allows significantly faster processing than Pascal; this is not the small evolutionary change we have become accustomed to but something closer to a revolutionary one.

In addition to effects in movies and other video, there is another possible use for Turing-based chips which might appeal to the gamer, if the architecture reaches the mainstream. With the ability to render existing sources with added ray tracing and denoising features, it might be possible for an enterprising soul to take an old game and remaster it in a way never before possible. Perhaps one day people who try to replay the original System Shock or Deus Ex will make it past the first few hours before the graphical deficiencies overwhelm their senses.

We expect to see more from NVIDIA tomorrow so stay tuned.

I want one. If that crypto thing ever goes back to what it was in December, I am getting one.

I’m a bit dubious as to how hard they’re hammering on performance improvements that are, at present, meaningless for gamers.

Case in point: Turing is 6X faster at ray tracing with DLAA than Pascal. Result! Except Pascal wasn’t designed for that, nothing uses it yet, and it’s entirely unclear how long such things will take to transition to actual game engines.

There are at least APIs out there for ray tracing now, and I believe Unreal supports some form of ray tracing. The components are there; it’s just a matter of performance.

How do you know the hardware used for the raytracing is meaningless for games?

What exactly is the hardware function doing? Do you think there is a new special multiplier that only works for ray tracing?

Most likely there is very little, or more likely no, hardware that is only used for ray tracing.

It’s called RT cores:

“… these RT cores will perform the ray calculations themselves at what NVIDIA is claiming is up to 10 GigaRays/second, or up to 25X the performance of the current Pascal architecture. …”

RT cores means Ray Tracing cores. While I can’t say that they won’t be used for something else, as far as I understand they were specifically developed for ray tracing, hence the name.

10 gigarays per second is only an approximation of the many, many quintillions (1 × 10^18, US usage) of photons per second that fly around in the average environment. Those 10 gigarays of processing power will mostly be taken up calculating ray reflections, refractions, and bounces, and that is not many rays at high frame rates, where only milliseconds are available per frame. So Nvidia, or AMD for that matter, will have to use those rays sparingly for things like shadow casting and reflections/refractions, and mix that in with the raster pipeline output. That is why Nvidia has begun to use AI methods to mix down the AO/AI and ray-traced shadow calculation results, resampling/smoothing the grainy output that a limited number of rays produces; 10 gigarays is a small sum compared to real-world photon counts per second (1).
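Why a limited ray budget looks grainy can be shown with plain Monte Carlo sampling: if each pixel estimates how much of the sky it can see by firing N random rays, the pixel-to-pixel noise shrinks only as 1/sqrt(N), so quadrupling the rays merely halves the grain. The sketch below is a generic illustration using a hypothetical 30% visibility value; it is not Nvidia's AI denoiser, only the kind of noise such a denoiser is meant to smooth.

```python
import math
import random
import statistics


def estimate_visibility(true_visibility: float, rays: int, rng: random.Random) -> float:
    """Fraction of `rays` that escape unblocked; each escapes with probability true_visibility."""
    escaped = sum(1 for _ in range(rays) if rng.random() < true_visibility)
    return escaped / rays


TRUE_VISIBILITY = 0.3  # hypothetical: 30% of the hemisphere above the pixel is open
PIXELS = 2000          # many pixels share the same true value, so their spread is pure noise
rng = random.Random(42)

noise = {}
for rays in (1, 4, 16, 64, 256):
    estimates = [estimate_visibility(TRUE_VISIBILITY, rays, rng) for _ in range(PIXELS)]
    noise[rays] = statistics.pstdev(estimates)
    predicted = math.sqrt(TRUE_VISIBILITY * (1 - TRUE_VISIBILITY) / rays)
    print(f"{rays:3d} rays/pixel: measured noise {noise[rays]:.3f}, predicted {predicted:.3f}")
```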

Both Nvidia and AMD have access to the ray tracing libraries, so they can both achieve similar results. More information is needed on Nvidia’s ray tracing IP functional blocks to see if that IP is any different from AMD’s compute shader IP, which is in hardware and available for any kind of calculation.

How many ray calculations per second can AMD’s Vega compute on its nCU compute cores? Vega 56 has 4096 shader cores, so there need to be some metrics on that for comparison. Nvidia needs to come out with the detailed whitepapers; at least Nvidia is rather good at publishing whitepaper-quality material, while AMD has not published as many whitepapers, though it is getting better lately.

“So, in order to emit 60 Joules per second, the lightbulb must emit 1.8 x 10^20 photons per second. (that’s 180,000,000,000,000,000,000 photons per second!)” (1)
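The quoted figure can be reproduced from E = hc/λ. The sketch below assumes all 60 W leaves the bulb as light at a single 600 nm wavelength; that wavelength is an assumption chosen here for illustration, since the problem set's own inputs are not quoted, but it lands on the same 1.8 × 10^20. It then compares the result with the 10 gigarays/second figure.

```python
PLANCK = 6.62607015e-34      # J*s, exact by SI definition
LIGHT_SPEED = 299_792_458.0  # m/s, exact by SI definition


def photons_per_second(power_watts: float, wavelength_m: float) -> float:
    """Photon emission rate if all power leaves as light of one wavelength."""
    photon_energy = PLANCK * LIGHT_SPEED / wavelength_m  # E = hc / lambda, in joules
    return power_watts / photon_energy


bulb = photons_per_second(60.0, 600e-9)  # assumed: 600 nm light, 100% efficient bulb
rtx_rays = 10e9                          # quoted 10 gigarays/second

print(f"60 W bulb: {bulb:.2e} photons/s")  # 1.81e+20
print(f"bulb photons per RTX ray: {bulb / rtx_rays:.1e}")
```

Under these assumptions a single bulb emits on the order of 10^10 photons for every ray the card can trace.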

So reality produces a lot more rays: individual atoms are faster than femtosecond-fast with their analog calculations, producing photons of many different wavelengths by the billions of quintillions, and they produce reflections, refractions, and other effects PDQ as well. I’ll bet that Nvidia uses hundreds of its Volta-based Tesla GPUs to do the training that produces the trained AI, which is then loaded into those Tensor Cores to run the ray sample degraining/resampling for those Quadros. Nvidia does not have a monopoly on Tensor Cores, and TensorFlow libraries can also be run on older Nvidia and AMD GPUs via OpenCL and CUDA.

I’m looking forward to Nvidia’s whitepapers to see if any of Imagination Technologies’ IP was licensed; maybe AMD has been looking at that IP too, or maybe some in-house IP was used. Maybe more will be known at Hot Chips this year, or next year at the latest.

(1) “Astronomy 101 Problem Set #6 Solutions”, problem 2: https://www.eg.bucknell.edu/physics/astronomy/astr101/prob_sets/ps6_soln.html

Edit: “Vega 56 has 4096” should read “Vega 64 has 4096”.

Yes, the hardware is optimized for that task. If it weren’t, why wouldn’t they mention that? It would be a pretty killer feature.

I should add that I have absolutely no problem with a company releasing features that aren’t going to be used for a while. AMD did that for years (tessellation being the big one). I’m more concerned that there’s not really any indication that the standard shading units are much faster. But hey, leaked benchmarks say otherwise so we’ll see 🙂

Ray tracing calculations are a compute-oriented task, so any GPU that can run compute workloads fully in hardware on its shader cores is already equipped to handle ray tracing workloads efficiently. If your GPU supports OpenCL, then it can already do ray tracing workloads; ditto for CUDA.
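As a minimal sketch of why ray tracing maps naturally onto compute hardware, the function below generates one primary camera ray per pixel. It assumes a simple pinhole camera at the origin looking down -z with a 60-degree vertical field of view; the function name and parameters are hypothetical. Each ray depends only on its own pixel coordinates, which is exactly the kind of independent work OpenCL or CUDA spreads across shader cores.

```python
import math


def primary_ray(px: int, py: int, width: int, height: int,
                fov_degrees: float = 60.0) -> tuple[float, float, float]:
    """Unit direction of the camera ray through the centre of pixel (px, py).

    Hypothetical pinhole camera at the origin looking down -z, y up,
    with fov_degrees as the vertical field of view.
    """
    aspect = width / height
    scale = math.tan(math.radians(fov_degrees) / 2)
    x = (2 * (px + 0.5) / width - 1) * aspect * scale  # column mapped to [-1, 1], then camera space
    y = (1 - 2 * (py + 0.5) / height) * scale          # row 0 is the top of the image
    length = math.sqrt(x * x + y * y + 1)
    return (x / length, y / length, -1 / length)


# No ray depends on any other, so on a GPU each pixel could be its own thread;
# this plain loop stands in for that parallel launch.
WIDTH, HEIGHT = 4, 3
rays = [primary_ray(px, py, WIDTH, HEIGHT) for py in range(HEIGHT) for px in range(WIDTH)]
print(len(rays), "independent primary rays")
```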

So Nvidia’s Ray Tracing cores need some whitepapers published so their functionality can be compared to the compute shaders already present on other makes and models of GPUs. CPU cores were the original way to perform ray tracing calculations, and both CPUs and GPUs can be used for ray tracing workloads. Any fully on-GPU compute hardware is going to make ray tracing mixed with rasterization a more efficient task; just look at Imagination Technologies’ PowerVR Wizard ray tracing hardware IP for its GPUs.

Vega’s explicit primitive shaders are not being used much currently, partially because so many news sites reported that AMD had canceled primitive shaders, instead of reporting that AMD only canceled implicit primitive shaders and not explicit ones. So interest in any type of primitive shader usage effectively disappeared. Also, programming for any new GPU feature set that is not an industry standard is costly, because of the limited number of devices making use of that technology.

Maybe that Chinese semi-custom Zen/Vega APU will get developers more interested in making use of Vega’s explicit primitive shaders, in order to get the most performance out of that APU’s limited Vega nCU resources. Console titles are more optimized because of consoles’ limited graphics hardware resources, so that market will spend what is necessary to sell its brand of console and closed ecosystem. In the console market it is the closed ecosystem that makes the money, more than the console hardware, so console makers will invest more in gaming software optimizations, because every console sold generates a continuous revenue stream via that closed gaming ecosystem.

PC gaming, on the other hand, appears content to let the GPU hardware makers solve its software performance problems by throwing more powerful GPU hardware at inefficient game code. PC game makers save millions by letting the GPU makers keep throwing more powerful hardware at the problem, rather than optimizing for the hardware features that already exist in the current generation of GPUs. The GPU makers will gladly keep selling more powerful hardware, because that means more upgrade sales, while the PC game makers avoid paying the programming costs to fully optimize their titles.

Console gaming will be going over more fully to Zen/Vega for Xbox, PlayStation, and that Chinese semi-custom console APU, which is the first to make full use of Zen/Vega APU IP. I’m sure that many console game makers will be getting that Chinese console’s development platform to get a head start on games development for future Xbox and PlayStation consoles, which will definitely be making use of Zen/Vega and Zen/Navi. Navi will probably have some of the very same Vega-type feature sets, in addition to newer feature sets for console gaming. AMD may just be using console development R&D funded by the console makers to help offset development costs for PC-oriented gaming GPUs, which is not a bad idea considering AMD’s limited funds relative to Nvidia for gaming-oriented GPU SKUs.

Where Turing does have an immediate advantage is its Tensor Cores and an already trained, ready-to-go AI denoiser running on them, which will be quicker than any CPU- or GPU-accelerated denoising method. That means that even with the limited number of rays available, Nvidia’s Turing GPUs will be able to resample the grainy ray-traced output, mixed down with some raster output, to get better overall AA/AO and global illumination effects on Turing-based gaming offerings. Nvidia will do the AI training on its vast Volta/Tesla farms and then load the trained denoising AI into the Turing GPU’s Tensor Cores, and it will be able to continuously reoptimize that AI on those Teslas to further refine its denoising efficiency.

It’s like saying a CPU can do rasterizing already.

But it’s not optimized for it. (The same goes for encryption: every CPU can do it, but CPUs with hardware assist are an order of magnitude better at it.)

Ray/triangle intersection is not cheap to do in an OpenCL function, so having the intersection done in hardware is also massive.
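For a sense of the work involved, here is the Möller–Trumbore ray/triangle test, a widely used software approach. Nvidia has not said how its RT cores implement intersection, so this only illustrates the per-ray arithmetic (two cross products, several dot products, and a division) that a shader would otherwise run for every candidate triangle. The test triangle is hypothetical.

```python
from typing import Optional

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersect_triangle(origin: Vec, direction: Vec, v0: Vec, v1: Vec, v2: Vec,
                       eps: float = 1e-9) -> Optional[float]:
    """Möller–Trumbore test: distance t along the ray to the hit, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None  # hits behind the ray origin do not count


tri = ((-1.0, -1.0, -5.0), (1.0, -1.0, -5.0), (0.0, 1.0, -5.0))
print(intersect_triangle((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), *tri))  # 5.0
print(intersect_triangle((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), *tri))   # None (triangle is behind)
```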

There is also some BVH support, but I can’t comment on that.

The shading portion would be equal, but the scene traversal and intersection acceleration will allow for game engines to move to ray tracing for a lot of its scene features.
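BVH support is what lets a ray skip most of the scene: objects are wrapped in nested axis-aligned boxes, and if a ray misses a box, nothing inside it needs a triangle test. The sketch below shows the common slab test for ray/box hits plus a minimal recursive traversal over a hypothetical two-leaf scene; how Turing's hardware lays out or walks its BVH has not been described, so treat this purely as an illustration of the idea.

```python
import math

Vec = tuple[float, float, float]


def ray_hits_box(origin: Vec, direction: Vec, box_min: Vec, box_max: Vec) -> bool:
    """Slab test: does the ray pass through the axis-aligned box at some t >= 0?"""
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        lo, hi = box_min[axis], box_max[axis]
        if d == 0.0:
            if o < lo or o > hi:  # parallel to this pair of planes and outside them
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:  # the per-axis intervals no longer overlap: a miss
            return False
    return True


def traverse(node: dict, origin: Vec, direction: Vec) -> list[str]:
    """Names of leaf boxes the ray enters, skipping any subtree whose box it misses."""
    if not ray_hits_box(origin, direction, node["min"], node["max"]):
        return []
    if "children" not in node:
        return [node["name"]]
    hits: list[str] = []
    for child in node["children"]:
        hits += traverse(child, origin, direction)
    return hits


# Hypothetical two-leaf scene; in a real BVH each leaf would hold triangles.
scene = {
    "name": "root", "min": (-10.0, -1.0, -6.0), "max": (1.0, 1.0, -4.0),
    "children": [
        {"name": "left", "min": (-10.0, -1.0, -6.0), "max": (-8.0, 1.0, -4.0)},
        {"name": "centre", "min": (-1.0, -1.0, -6.0), "max": (1.0, 1.0, -4.0)},
    ],
}
print(traverse(scene, (0.0, 0.0, 0.0), (0.0, 0.0, -1.0)))  # ['centre']
```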

Turing is a massive step forward (not just from its ray engine)

I don’t believe AMD got any new features for its 7nm GPU besides a die shrink.

The RTX 2080, I think, will be a massive hit. I think the reviews will relegate Vega to possibly the 2060 class of gaming cards.

I also think Nvidia will sell out of ALL its RTX lineup, including the $1080ti.

Guys, the captcha on this site is getting a bit annoying. I understand why it’s there, but does it need to be there for people with registered accounts too? I feel like I’m training a neural network every time I want to post here, and frankly it has stopped me from posting.

I complained about that on another thread. The desktop version is a simple check box: wait about 2-4 seconds and it’s done, only slightly annoying.

Mobile version on the other hand is, well to put it in terms to convey just how bad it is, IT SUCKS BALLS, DICKS, CHODES, A-HOLES AND ALL OF THE HAIR SURROUNDING THAT NETHER REGION!!!

Seriously, if this is to combat “bots”, I still stand by “get rid of the anonymous accounts”, and hence this problem of random bot/shit posts gets greatly reduced.

@PcPer, if you are able to change the mobile captcha version to do the same thing as desktop, then that might suffice for a while.

Well, I get the “street signs” stuff on the desktop too, but not always; sometimes I click “I’m not a robot” and it lets me pass without paying for admission.

Funnily enough, when you deliberately don’t click on the signs or cars, it lets you pass as well.

That’s reCAPTCHA (ReCrapAtYa) using you for inference testing for its nefarious reasons when you get those long ReCrapAtYa sessions. It’s not hard to find articles on what is going on there. You may even be tasked with helping the Google folks identify Street View signage/text so Google can save money on processing costs.

It can also be used for censorship, so any bad product responses can be discouraged. Google’s search AI has become a master at de-emphasizing negative search results about the products of companies that are big Google Ad clients. It’s a very nefarious and insidious process.

They need to use something other than street signs captured via Street View, and it’s debatable just what constitutes a “street” sign in reCAPTCHA! ReCrapAtYa also makes use of cookies to profile humans against bots, but cookies are nefarious by nature for an ad-spaffing interest such as Google (the largest ad spaffers on the planet).

Sign, sign, you must ID a sign

Blockin’ out my posting, breakin’ my mind

Do this CAPTCHA, don’t choose that, can’t you choose street signs?